About

OpenEarth (www.openearth.nl) is a free and open source initiative to deal with data, models and tools in earth science & engineering projects. In current practice, research, consultancy and construction projects commonly spend a significant part of their budget on setting up basic infrastructure for data and knowledge management. Most of these efforts disappear once the project is finished. As an alternative to these ad-hoc approaches, OpenEarth aims for a more continuous and cumulative approach to data & knowledge management. OpenEarth promotes, teaches and sustains project collaboration and skill sharing. As a result, research and consultancy projects no longer need to waste valuable resources by repeatedly starting from scratch. Instead, they can build on the preserved efforts of the many projects before them.

Over many years, Delft University of Technology and Deltares together developed OpenEarth as a free and open source alternative to the project-by-project and institution-by-institution approaches to dealing with data, models and tools (e.g. Van Koningsveld et al. 2004). At its most abstract level, OpenEarth represents the philosophy that data, models and tools should flow as freely and openly as possible across the artificial boundaries of projects and organizations (or at least departments). In practice, OpenEarth exists only because there is a robust user community that works according to this philosophy (a bottom-up approach). In its most concrete and operational form, OpenEarth facilitates collaboration within its user community by providing an open ICT infrastructure, built from the best available open source components, in combination with a well-defined workflow, described in open protocols that are based as much as possible on widely accepted international standards.

OpenEarth as a whole (philosophy, user community, infrastructure and workflow) is the first comprehensive approach to handling data, models and tools that actually works in practice at a truly significant scale. It is implemented not only at its founding organizations, Delft University of Technology and Deltares, but also in a number of research programs with multiple partners. As a result, OpenEarth is now carried by a rapidly growing user community that currently consists of more than 1000 users, of whom over 100 are developers who together made over 10,000 contributions. This community comes from tens of organizations in multiple countries. Together they share and co-develop thousands of tools, terabytes of data and numerous models (source code as well as model schematisations).

The OpenEarth infrastructure and workflow

Improper management of data, models and tools can easily result in a wide range of very recognizable frustrations:

- Accidentally using older versions of data, models or tools

- Not knowing where the most recent version is and what its status is

- Making the same mistake twice due to lack of control over versions

- Losing important datasets that are extremely hard to replace

- Uncertainty as to what quantities have been measured and which units apply

- Uncertainty as to the geographical location of measurements

- Uncertainty as to the time and time zone the measurements were taken in

- Lack of insight in the approach taken and the methods used

- A wide range of formats of incoming (raw) data

- Getting the feeling that a certain issue must have been addressed before by another analyst

- Running into a multitude of tools for the same thing

- Running into a multitude of databases each in its own language and style

Although the frustrations described above are very common throughout the hydraulic engineering industry, no practical and widely accepted remedy used to be available. Since 2003, OpenEarth has been developed to fill this gap. It provides an infrastructure that supports a bottom-up approach to long-term, project-transcending collaboration and adheres to four basic criteria:

- Open standards & open source – The infrastructure should be based on open standards, not require non-libre software and be transferable.

- Complete transparency – The collected data should be reproducible, unambiguous and self-descriptive. Tools and models should be open source, well documented and tested.

- Centralised web-access – The collection and dissemination procedure for data, models and tools should be web-based and centralised to maximize access, promote collaboration and impede divergence.

- Clear ownership and responsibility – Although data, models and tools are collected and disseminated via the OpenEarth infrastructure, the responsibility for the quality of each product remains at its original owner.
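As an illustration of what "unambiguous and self-descriptive" data could mean in practice, the sketch below checks that a measurement record states its quantity, units, coordinate reference system and time zone, and converts its timestamp to UTC. The attribute names (`standard_name`, `units`, `epsg_code`, `utc_offset_hours`) and both helper functions are assumptions made for this example, loosely modelled on common metadata conventions; they are not taken from the OpenEarth tools.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical minimal set of attributes a measurement record must carry.
# The names are assumptions for this sketch, not an OpenEarth requirement.
REQUIRED_ATTRIBUTES = ("standard_name", "units", "epsg_code", "utc_offset_hours")


def missing_attributes(record):
    """Return the required attributes that are absent or empty in a record."""
    return [name for name in REQUIRED_ATTRIBUTES if record.get(name) in (None, "")]


def to_utc(local_time, utc_offset_hours):
    """Convert a naive local timestamp to a timezone-aware UTC timestamp."""
    local_zone = timezone(timedelta(hours=utc_offset_hours))
    return local_time.replace(tzinfo=local_zone).astimezone(timezone.utc)


# Illustrative record with made-up values, not a real measurement.
record = {
    "standard_name": "sea_water_temperature",
    "units": "degree_Celsius",
    "epsg_code": 4326,          # WGS 84 geographic coordinates
    "utc_offset_hours": 1,      # timestamp was recorded in UTC+1
    "time": datetime(2010, 3, 1, 12, 0),
    "value": 6.5,
}

problems = missing_attributes(record)          # empty list: nothing is missing
utc_time = to_utc(record["time"], record["utc_offset_hours"])
```

A record that fails such a check is exactly the kind of dataset that leads to the uncertainty about quantities, units, location and time zone listed earlier.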

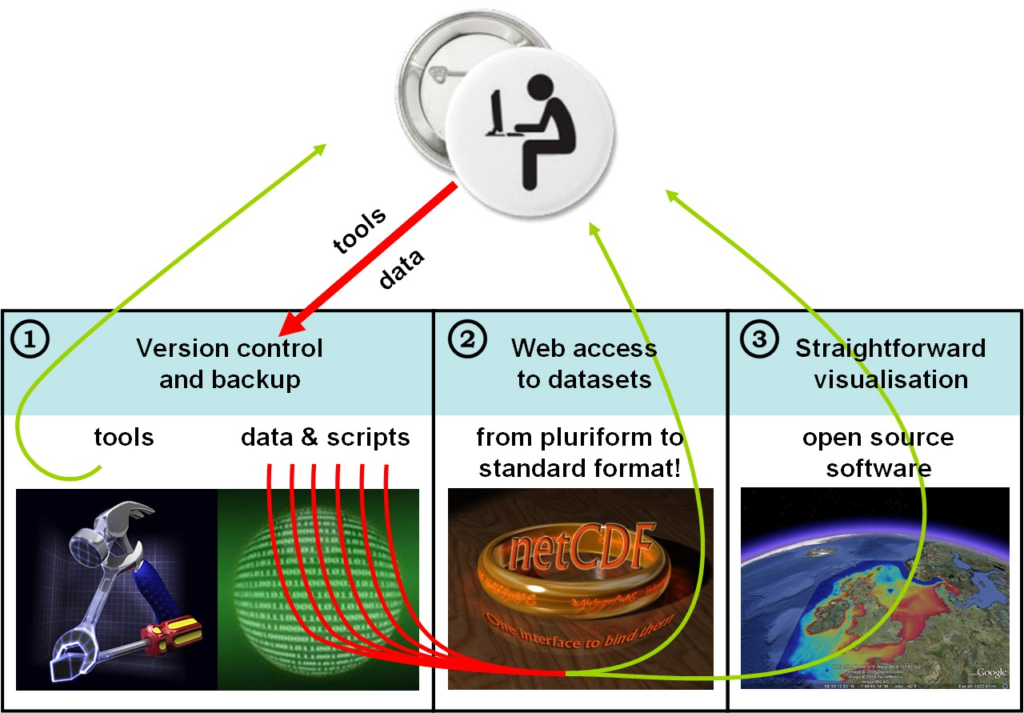

The heart of the OpenEarth infrastructure is formed by three kinds of web-services (as also shown in the figure above):

- a web service for version control and back-up of raw data, model schematisations and computer code,

- a web service for accessing data numbers, and

- a web service for accessing data graphics.

Once data, models and tools are stored according to the OpenEarth principles, it becomes quite straightforward to develop specific tools that address a particular user need. Examples of such applications developed within Building with Nature are:

Usage skills

OpenEarth is useful to various actors in different roles in the science and engineering context. Here we discuss the required usage skills for:

- project engineers/hands-on researchers,

- project leaders/principal investigators and program managers.

Project engineers/hands-on researchers

OpenEarth is primarily meant for those project engineers/hands-on researchers who analyse data and model outputs on a regular basis.

Data examples are elevation recordings and bathymetry soundings, counts of species, remote sensing imagery and in situ measurements with buckets, ADCP, OBS, conductivity sensor, thermometer, waverider. Model examples include Delft3D, Delwaq, SWAN, ASMITA, Unibest and XBeach.

Project engineers and hands-on researchers need to learn about version control and about a few international standards for exchanging data over the web. These can be incorporated into their existing workflows, so they can keep using the tools they already know, whether Matlab, Python, R or ArcGIS, because OpenEarth is simply a workflow for embedding existing processing into an overall framework for collaboration. OpenEarth selected the most promising standards that have been implemented worldwide for raw data, standard data, tailored data, graphics of data and metadata. For these standards, dedicated tutorials have been compiled in collaboration with other institutes for the most common general analysis tools: Matlab (IHE), Python (TUD), R (IMARES), Delft3D, OPeNDAP (KNMI, Rijkswaterstaat), WxS (KNMI). In addition, free hands-on courses, so-called sprint sessions, are organized a few times per year. The aim is that within one day participants can work with these international standards on their own data, using their own favourite analysis tool. These standards are gradually being made compulsory at EU level by the INSPIRE legislation.

Project leaders/principal investigators and program managers

Project leaders/principal investigators and program managers can also adopt OpenEarth in their projects. The only skills they need are to secure, when composing a project team, enough funding for staff with expertise in the standardisation and automation of data and model processing, and to show enough leadership to enforce its use. Experts tend to use artisanal approaches tailored to their specific needs, which hampers exchange between the disciplines in a project. In addition, they rarely guarantee the provenance of their data. This has allowed many debacles to occur, such as those around the IPCC (Intergovernmental Panel on Climate Change) data or the widespread fraud of a Nijmegen professor. The lack of proper control of automation in science has been discussed in a recent Nature article. OpenEarth offers a complete workflow to circumvent these issues. Project leaders can therefore require their project teams to adopt common standards, following the consensus approach of OpenEarth. In a sense, project leaders/principal investigators and program managers are therefore the most influential users of OpenEarth, even though they do not need to know the details of the standards.

Building with Nature interest

The Data and Knowledge Management part of BwN adopted the infrastructure and standards made available by OpenEarth. The benefit of joining the OpenEarth initiative is that previously uploaded datasets, as well as the hundreds of tools already developed in other projects, become available to the BwN partners (all routines in OpenEarth are open source, distributed under the conditions of the GNU Lesser General Public License). Furthermore, an active international community of practice is already established, facilitating optimal use of available resources. Training in the use of the available tools is given on a regular basis and can easily be extended to the BwN community.

It is important for the BwN program to establish maximum overlap between on-going activities related to the storage and dissemination of data, models and tools. On the one hand this means conforming as much as possible to existing standards (adopting the successes of previous projects); on the other hand it means striving for maximum usability (avoiding the pitfalls of previous projects). Data is put under version control, converted to a commonly used data standard (NetCDF for grids or PostgreSQL for biodiversity data) and disseminated through a web-based data server (OPeNDAP). Models are put under version control, stored and disseminated through a repository. Tools, also put under version control, are shared by creating a common toolbox that can be used by all partners.
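To give an impression of how data served through OPeNDAP can be reached from any analysis tool, the sketch below builds a request URL for a subset of one gridded variable. It assumes a server that supports the DAP2 URL syntax, where a response suffix such as `.ascii` and a constraint of the form `variable[start:stride:stop]` select part of a dataset. The server address, file name, variable name `z` and the helper function are hypothetical and serve only as an illustration.

```python
from urllib.parse import quote


def opendap_subset_url(dataset_url, variable, index_ranges, response="ascii"):
    """Build a DAP2-style request URL for a subset of one variable.

    index_ranges holds one (start, stride, stop) tuple per dimension.
    """
    hyperslab = "".join(
        f"[{start}:{stride}:{stop}]" for start, stride, stop in index_ranges
    )
    # Keep brackets and colons readable; escape anything else that is unsafe.
    constraint = quote(f"{variable}{hyperslab}", safe="[]:")
    return f"{dataset_url}.{response}?{constraint}"


# Hypothetical server and file name, for illustration only.
url = opendap_subset_url(
    "http://opendap.example.org/thredds/dodsC/bathymetry/grid.nc",
    "z",
    [(0, 1, 0), (0, 10, 99), (0, 10, 99)],  # first time step, every 10th cell
)
```

Because the subset is described in the URL itself, the same request can be issued from Matlab, Python, R or a web browser without first downloading the whole file.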